Inspecting the data

- housing.info()

- housing.describe()

- housing.hist(bins=50, figsize=(20,15))  # histograms of the continuous (numeric) attributes

- plt.show()  # requires: import matplotlib.pyplot as plt

Use value_counts() to inspect a discrete (categorical) variable:

housing['ocean_proximity'].value_counts()

Splitting the data into training and test sets

Method 1: train_test_split

from sklearn.model_selection import train_test_split

train_set,test_set = train_test_split(housing,test_size=0.2,random_state=42)
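As a quick check of what Method 1 does, the sketch below runs train_test_split on a small made-up DataFrame (the `toy` data is hypothetical and only stands in for `housing`). It shows that fixing random_state makes the split reproducible and that the two sets do not overlap.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

# Hypothetical stand-in for the housing DataFrame (100 rows).
toy = pd.DataFrame({
    "median_income": np.linspace(0.5, 15.0, 100),
    "median_house_value": np.linspace(50_000, 500_000, 100),
})

# Same random_state -> same shuffle -> identical split on every run.
train_a, test_a = train_test_split(toy, test_size=0.2, random_state=42)
train_b, test_b = train_test_split(toy, test_size=0.2, random_state=42)

print(len(train_a), len(test_a))
print(test_a.index.equals(test_b.index))
```

A purely random split like this ignores how important attributes are distributed, which is what motivates the stratified approach in Method 2.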

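Method 2: StratifiedShuffleSplit

Consider stratified sampling, for example stratifying on median income, which is an important attribute for predicting house prices.

Stratify the median income: divide by 1.5 and round up with ceil to get discrete categories, then merge every category above 5 into category 5.

housing['income_cat'] = np.ceil(housing['median_income'] / 1.5)

housing['income_cat'] = housing['income_cat'].where(housing['income_cat'] < 5, 5.0)  # assign the result back instead of using inplace=True on a column selection

Using sklearn's StratifiedShuffleSplit: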

from sklearn.model_selection import StratifiedShuffleSplit

split = StratifiedShuffleSplit(n_splits=1,test_size=0.2,random_state=42)

for train_index, test_index in split.split(housing, housing['income_cat']):

    strat_train_set = housing.loc[train_index]

    strat_test_set = housing.loc[test_index]

def income_cat_proportions(data):

    return data["income_cat"].value_counts() / len(data)

train_set, test_set = train_test_split(housing, test_size=0.2, random_state=42)

Verifying the stratified sampling

compare_props = pd.DataFrame({

    "Overall": income_cat_proportions(housing),

    "Stratified": income_cat_proportions(strat_test_set),

    "Random": income_cat_proportions(test_set),

}).sort_index()

compare_props["Rand. %error"] = 100 * compare_props["Random"] / compare_props["Overall"] - 100

compare_props["Strat. %error"] = 100 * compare_props["Stratified"] / compare_props["Overall"] - 100
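The comparison above can be tried end to end without the real dataset. The following is a minimal sketch on synthetic incomes (the lognormal parameters and the `toy` frame are assumptions chosen only so that all five income categories are populated). It checks that the category proportions in the stratified test set stay close to the overall proportions.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import StratifiedShuffleSplit

# Synthetic, hypothetical incomes; not the real housing data.
rng = np.random.default_rng(0)
toy = pd.DataFrame({"median_income": rng.lognormal(mean=1.2, sigma=0.5, size=1000)})

# Same binning idea as in the notes: ceil(income / 1.5), capped at 5.
toy["income_cat"] = np.ceil(toy["median_income"] / 1.5).clip(upper=5.0)

splitter = StratifiedShuffleSplit(n_splits=1, test_size=0.2, random_state=42)
train_idx, test_idx = next(splitter.split(toy, toy["income_cat"]))
strat_train, strat_test = toy.iloc[train_idx], toy.iloc[test_idx]

# Compare per-category proportions: full data vs. stratified test set.
overall = toy["income_cat"].value_counts(normalize=True).sort_index()
strat = strat_test["income_cat"].value_counts(normalize=True).sort_index()
max_gap = (overall - strat).abs().max()
print(pd.DataFrame({"Overall": overall, "Stratified": strat}))
print("largest proportion gap:", max_gap)
```

Because split() yields positional indices, the sketch uses iloc; the loc call in the notes is equivalent only while the DataFrame keeps its default 0..n-1 index.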

Drop the income_cat column

for set_ in (strat_train_set, strat_test_set):

    set_.drop("income_cat", axis=1, inplace=True)

Using plots to examine the relationship between longitude and latitude

housing.plot(kind='scatter', x='longitude', y='latitude', alpha=0.1)

Adding color

housing.plot(kind="scatter", x="longitude", y="latitude", alpha=0.4,

    s=housing["population"]/100, label="population", figsize=(10,7),

    c="median_house_value", cmap=plt.get_cmap("jet"), colorbar=True,

    sharex=False)

plt.legend()

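Looking for correlations

- Method 1: corr

corr_matrix = housing.corr(numeric_only=True)  # numeric_only skips the text column ocean_proximity (available in pandas 1.5+)

corr_matrix['median_house_value'].sort_values(ascending=False)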
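To make the ranking step concrete, here is a small sketch on made-up numbers (the `toy` values are hypothetical). It selects the numeric columns explicitly with select_dtypes, which is one way to keep the text column out of the correlation matrix regardless of pandas version.

```python
import pandas as pd

# Hypothetical mini dataset with one text column, like ocean_proximity.
toy = pd.DataFrame({
    "median_income": [1.0, 2.0, 3.0, 4.0, 5.0],
    "median_house_value": [100.0, 210.0, 290.0, 405.0, 500.0],
    "housing_median_age": [50.0, 40.0, 30.0, 20.0, 10.0],
    "ocean_proximity": ["INLAND", "NEAR BAY", "INLAND", "NEAR OCEAN", "INLAND"],
})

# Pearson correlation is only defined for numeric columns.
corr = toy.select_dtypes(include="number").corr()

# Rank every attribute by its correlation with the target.
ranked = corr["median_house_value"].sort_values(ascending=False)
print(ranked)
```

Values near 1 or -1 indicate a strong linear relationship; corr does not capture nonlinear ones, which is one reason to also look at the scatter plots of Method 2.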

- Method 2: scatter_matrix

from pandas.plotting import scatter_matrix

attributes = ['median_house_value', 'median_income', 'total_rooms', 'housing_median_age']

scatter_matrix(housing[attributes], figsize=(12,8))

housing.plot(kind='scatter', x='median_income', y='median_house_value', alpha=0.1)

Separating the data into features and labels

housing = strat_train_set.drop("median_house_value", axis=1) # drop labels for training set

housing_labels = strat_train_set["median_house_value"].copy()

Finding rows with null values

sample_incomplete_rows = housing[housing.isnull().any(axis=1)].head()

sample_incomplete_rows

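Filling missing values with an imputer

from sklearn.impute import SimpleImputer  # replaces sklearn.preprocessing.Imputer, which was removed in scikit-learn 0.22

imputer = SimpleImputer(strategy="median")

housing_num = housing.drop("ocean_proximity", axis=1)  # drop the categorical column; a median only exists for numeric data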

imputer.fit(housing_num)

imputer.statistics_

housing_num.median().values

X = imputer.transform(housing_num)  # X is a NumPy array

housing_tr = pd.DataFrame(X, columns=housing_num.columns,

    index=housing.index)  # convert X back into a DataFrame with the original columns and index
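The imputation steps can be checked on a tiny example. This sketch assumes a recent scikit-learn where SimpleImputer lives in sklearn.impute; the `toy_num` frame is made up. It shows that the learned statistics_ are the column medians and that the original index survives the round trip through a NumPy array.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer

# Hypothetical numeric frame with gaps and a non-default index.
toy_num = pd.DataFrame(
    {"total_bedrooms": [100.0, np.nan, 300.0, 500.0],
     "population": [10.0, 20.0, np.nan, 40.0]},
    index=[7, 3, 9, 1],
)

imputer = SimpleImputer(strategy="median")
X = imputer.fit_transform(toy_num)  # plain NumPy array, index and columns are lost

# Rebuild a DataFrame so later steps can keep using labels.
toy_tr = pd.DataFrame(X, columns=toy_num.columns, index=toy_num.index)

print(imputer.statistics_)  # per-column medians learned by fit
print(toy_tr)
```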

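Method 1: encoding categorical data as integers with factorize

housing_cat = housing['ocean_proximity']

housing_cat_encoded, housing_categories = housing_cat.factorize()

print(housing_cat_encoded[:10])  # integer codes assigned to the categories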

print(housing_cat[:10])
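A short sketch of what factorize returns, using a made-up Series in place of `housing_cat`: codes are assigned in order of first appearance, and indexing the returned categories with the codes recovers the original values.

```python
import pandas as pd

# Hypothetical category column.
cats = pd.Series(["INLAND", "NEAR BAY", "INLAND", "ISLAND", "NEAR BAY"])

# codes: integer array; uniques: Index of categories in order of first appearance.
codes, uniques = cats.factorize()

print(codes)
print(list(uniques))
print(list(uniques[codes]))  # decoding: map each code back to its label
```

Note that these integers carry an arbitrary order, which is why the notes move on to one-hot encoding next.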

Method 2: one-hot encoding with OneHotEncoder (the result is a SciPy sparse matrix)

from sklearn.preprocessing import OneHotEncoder

encoder = OneHotEncoder()

housing_cat_1hot = encoder.fit_transform(housing_cat_encoded.reshape(-1,1))

housing_cat_1hot

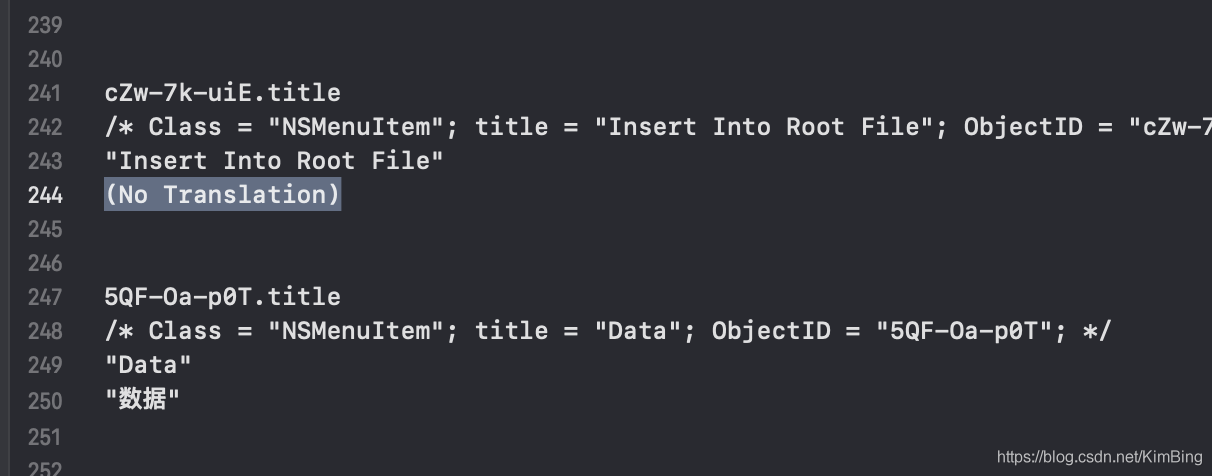

Method 3: CategoricalEncoder

Definition of the CategoricalEncoder class, copied from PR #9151.

Just run this cell, or copy it to your code, do not try to understand it (yet).

import numpy as np

from sklearn.base import BaseEstimator, TransformerMixin

from sklearn.utils import check_array

from sklearn.preprocessing import LabelEncoder

from scipy import sparse

class CategoricalEncoder(BaseEstimator, TransformerMixin):

"""Encode categorical features as a numeric array.

The input to this transformer should be a matrix of integers or strings,

denoting the values taken on by categorical (discrete) features.

The features can be encoded using a one-hot aka one-of-K scheme

(encoding='onehot', the default) or converted to ordinal integers

(encoding='ordinal').

This encoding is needed for feeding categorical data to many scikit-learn

estimators, notably linear models and SVMs with the standard kernels.

Read more in the :ref:`User Guide <preprocessing_categorical_features>`.

Parameters

----------

encoding : str, 'onehot', 'onehot-dense' or 'ordinal'

The type of encoding to use (default is 'onehot'):

- 'onehot': encode the features using a one-hot aka one-of-K scheme

(or also called 'dummy' encoding). This creates a binary column for

each category and returns a sparse matrix.

- 'onehot-dense': the same as 'onehot' but returns a dense array

instead of a sparse matrix.

- 'ordinal': encode the features as ordinal integers. This results in

a single column of integers (0 to n_categories - 1) per feature.

categories : 'auto' or a list of lists/arrays of values.

Categories (unique values) per feature:

- 'auto' : Determine categories automatically from the training data.

- list : categories[i] holds the categories expected in the ith

column. The passed categories are sorted before encoding the data

(used categories can be found in the categories_ attribute).

dtype : number type, default np.float64

Desired dtype of output.

handle_unknown : 'error' (default) or 'ignore'

Whether to raise an error or ignore if an unknown categorical feature is

present during transform (default is to raise). When this parameter

is set to 'ignore' and an unknown category is encountered during

transform, the resulting one-hot encoded columns for this feature

will be all zeros.

Ignoring unknown categories is not supported for

encoding='ordinal'.

Attributes

----------

categories_ : list of arrays

The categories of each feature determined during fitting. When

categories were specified manually, this holds the sorted categories

(in order corresponding with output of transform).

Examples

--------

Given a dataset with three features and two samples, we let the encoder

find the maximum value per feature and transform the data to a binary

one-hot encoding.

>>> from sklearn.preprocessing import CategoricalEncoder

>>> enc = CategoricalEncoder(handle_unknown='ignore')

>>> enc.fit([[0, 0, 3], [1, 1, 0], [0, 2, 1], [1, 0, 2]])

... # doctest: +ELLIPSIS

CategoricalEncoder(categories='auto', dtype=<... 'numpy.float64'>,

encoding='onehot', handle_unknown='ignore')

>>> enc.transform([[0, 1, 1], [1, 0, 4]]).toarray()

array([[ 1., 0., 0., 1., 0., 0., 1., 0., 0.],

[ 0., 1., 1., 0., 0., 0., 0., 0., 0.]])

See also

--------

sklearn.preprocessing.OneHotEncoder : performs a one-hot encoding of

integer ordinal features. The OneHotEncoder assumes that input

features take on values in the range [0, max(feature)] instead of

using the unique values.

sklearn.feature_extraction.DictVectorizer : performs a one-hot encoding of

dictionary items (also handles string-valued features).

sklearn.feature_extraction.FeatureHasher : performs an approximate one-hot

encoding of dictionary items or strings.

"""

def __init__(self, encoding='onehot', categories='auto', dtype=np.float64,

handle_unknown='error'):

self.encoding = encoding

self.categories = categories

self.dtype = dtype

self.handle_unknown = handle_unknown

def fit(self, X, y=None):

"""Fit the CategoricalEncoder to X.

Parameters

----------

X : array-like, shape [n_samples, n_feature]

The data to determine the categories of each feature.

Returns

-------

self

"""

if self.encoding not in ['onehot', 'onehot-dense', 'ordinal']:

template = ("encoding should be either 'onehot', 'onehot-dense' "

"or 'ordinal', got %s")

raise ValueError(template % self.encoding)

if self.handle_unknown not in ['error', 'ignore']:

template = ("handle_unknown should be either 'error' or "

"'ignore', got %s")

raise ValueError(template % self.handle_unknown)

if self.encoding == 'ordinal' and self.handle_unknown == 'ignore':

raise ValueError("handle_unknown='ignore' is not supported for"

" encoding='ordinal'")

X = check_array(X, dtype=object, accept_sparse='csc', copy=True)  # np.object was removed in NumPy 1.24

n_samples, n_features = X.shape

self._label_encoders_ = [LabelEncoder() for _ in range(n_features)]

for i in range(n_features):

le = self._label_encoders_[i]

Xi = X[:, i]

if self.categories == 'auto':

le.fit(Xi)

else:

valid_mask = np.in1d(Xi, self.categories[i])

if not np.all(valid_mask):

if self.handle_unknown == 'error':

diff = np.unique(Xi[~valid_mask])

msg = ("Found unknown categories {0} in column {1}"

" during fit".format(diff, i))

raise ValueError(msg)

le.classes_ = np.array(np.sort(self.categories[i]))

self.categories_ = [le.classes_ for le in self._label_encoders_]

return self

def transform(self, X):

"""Transform X using one-hot encoding.

Parameters

----------

X : array-like, shape [n_samples, n_features]

The data to encode.

Returns

-------

X_out : sparse matrix or a 2-d array

Transformed input.

"""

X = check_array(X, accept_sparse='csc', dtype=object, copy=True)  # np.object, np.int and np.bool were removed in NumPy 1.24

n_samples, n_features = X.shape

X_int = np.zeros_like(X, dtype=int)

X_mask = np.ones_like(X, dtype=bool)

for i in range(n_features):

valid_mask = np.in1d(X[:, i], self.categories_[i])

if not np.all(valid_mask):

if self.handle_unknown == 'error':

diff = np.unique(X[~valid_mask, i])

msg = ("Found unknown categories {0} in column {1}"

" during transform".format(diff, i))

raise ValueError(msg)

else:

# Set the problematic rows to an acceptable value and

# continue. The rows are marked in `X_mask` and will be

# removed later.

X_mask[:, i] = valid_mask

X[:, i][~valid_mask] = self.categories_[i][0]

X_int[:, i] = self._label_encoders_[i].transform(X[:, i])

if self.encoding == 'ordinal':

return X_int.astype(self.dtype, copy=False)

mask = X_mask.ravel()

n_values = [cats.shape[0] for cats in self.categories_]

n_values = np.array([0] + n_values)

indices = np.cumsum(n_values)

column_indices = (X_int + indices[:-1]).ravel()[mask]

row_indices = np.repeat(np.arange(n_samples, dtype=np.int32),

n_features)[mask]

data = np.ones(n_samples * n_features)[mask]

out = sparse.csc_matrix((data, (row_indices, column_indices)),

shape=(n_samples, indices[-1]),

dtype=self.dtype).tocsr()

if self.encoding == 'onehot-dense':

return out.toarray()

else:

return out

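housing_cat_reshaped = housing_cat.values.reshape(-1, 1)  # the encoder expects a 2-D array of shape (n_samples, 1)

cat_encoder = CategoricalEncoder(encoding="onehot-dense")

housing_cat_1hot = cat_encoder.fit_transform(housing_cat_reshaped)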

housing_cat_1hot
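In scikit-learn 0.20 and later, the built-in OneHotEncoder and OrdinalEncoder accept string categories directly, so the hand-copied class may not be needed. The sketch below assumes such a version and uses a made-up column (`housing_cat_toy` is hypothetical); it is an alternative to the class above, not the method from the notes.

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder, OrdinalEncoder

# Hypothetical 2-D column of string categories, shape (n_samples, 1).
housing_cat_toy = np.array(
    [["INLAND"], ["NEAR BAY"], ["INLAND"], ["ISLAND"]], dtype=object
)

# One-hot: sparse by default, so call toarray() for a dense view.
onehot = OneHotEncoder(handle_unknown="ignore")
dense = onehot.fit_transform(housing_cat_toy).toarray()

# Ordinal: one integer-valued column, categories sorted alphabetically.
ordinal = OrdinalEncoder().fit_transform(housing_cat_toy)

# With handle_unknown="ignore", an unseen category becomes an all-zero row.
unseen = onehot.transform(np.array([["NEAR OCEAN"]], dtype=object)).toarray()

print(onehot.categories_[0])
print(dense)
print(ordinal.ravel())
print(unseen)
```

This covers the 'onehot', 'onehot-dense' (via toarray) and 'ordinal' modes of the class above with maintained library code.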

Converting between a DataFrame and an array

attr_adder = CombinedAttributesAdder(add_bedrooms_per_room=False)

housing_extra_attribs = attr_adder.transform(housing.values)

housing_extra_attribs = pd.DataFrame(housing_extra_attribs, columns=list(housing.columns)+["rooms_per_household", "population_per_household"])

housing_extra_attribs.head()
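CombinedAttributesAdder is not defined anywhere in these notes. The following is a minimal sketch of what such a transformer might look like, assuming that total_rooms, total_bedrooms, population and households sit at column positions 3, 4, 5 and 6 (these positions and the `toy` data are assumptions, not taken from the notes). It takes a NumPy array, appends ratio columns, and the result is wrapped back into a DataFrame.

```python
import numpy as np
import pandas as pd
from sklearn.base import BaseEstimator, TransformerMixin

# Assumed column positions in the input array (hypothetical layout).
ROOMS_IX, BEDROOMS_IX, POPULATION_IX, HOUSEHOLDS_IX = 3, 4, 5, 6

class CombinedAttributesAdder(BaseEstimator, TransformerMixin):
    """Append ratio features to a 2-D array (illustrative sketch)."""

    def __init__(self, add_bedrooms_per_room=True):
        self.add_bedrooms_per_room = add_bedrooms_per_room

    def fit(self, X, y=None):
        return self  # nothing to learn

    def transform(self, X):
        rooms_per_household = X[:, ROOMS_IX] / X[:, HOUSEHOLDS_IX]
        population_per_household = X[:, POPULATION_IX] / X[:, HOUSEHOLDS_IX]
        extra = [rooms_per_household, population_per_household]
        if self.add_bedrooms_per_room:
            extra.append(X[:, BEDROOMS_IX] / X[:, ROOMS_IX])
        return np.c_[X, np.column_stack(extra)]

# Hypothetical two-row frame with the assumed column order.
toy = pd.DataFrame({
    "longitude": [-122.23, -122.22], "latitude": [37.88, 37.86],
    "housing_median_age": [41.0, 21.0], "total_rooms": [880.0, 7099.0],
    "total_bedrooms": [129.0, 1106.0], "population": [322.0, 2401.0],
    "households": [126.0, 1138.0], "median_income": [8.3252, 8.3014],
})

adder = CombinedAttributesAdder(add_bedrooms_per_room=False)
extra_arr = adder.transform(toy.values)  # DataFrame -> array -> wider array
extra_df = pd.DataFrame(
    extra_arr,
    columns=list(toy.columns) + ["rooms_per_household", "population_per_household"],
    index=toy.index,
)
print(extra_df.head())
```

Passing index=toy.index when rebuilding the DataFrame keeps the rows aligned with the original labels, which the array form does not carry.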